Efficient Data Collection for Robotic Manipulation via Compositional Generalization

We study compositional generalization with visual imitation learning policies for robotic manipulation. We find that policies exhibit significant compositional abilities, which can be exploited for more efficient strategies for in-domain robotic data collection. Importantly, we find that leveraging large-scale prior robotic datasets is critical for facilitating composition.

Abstract

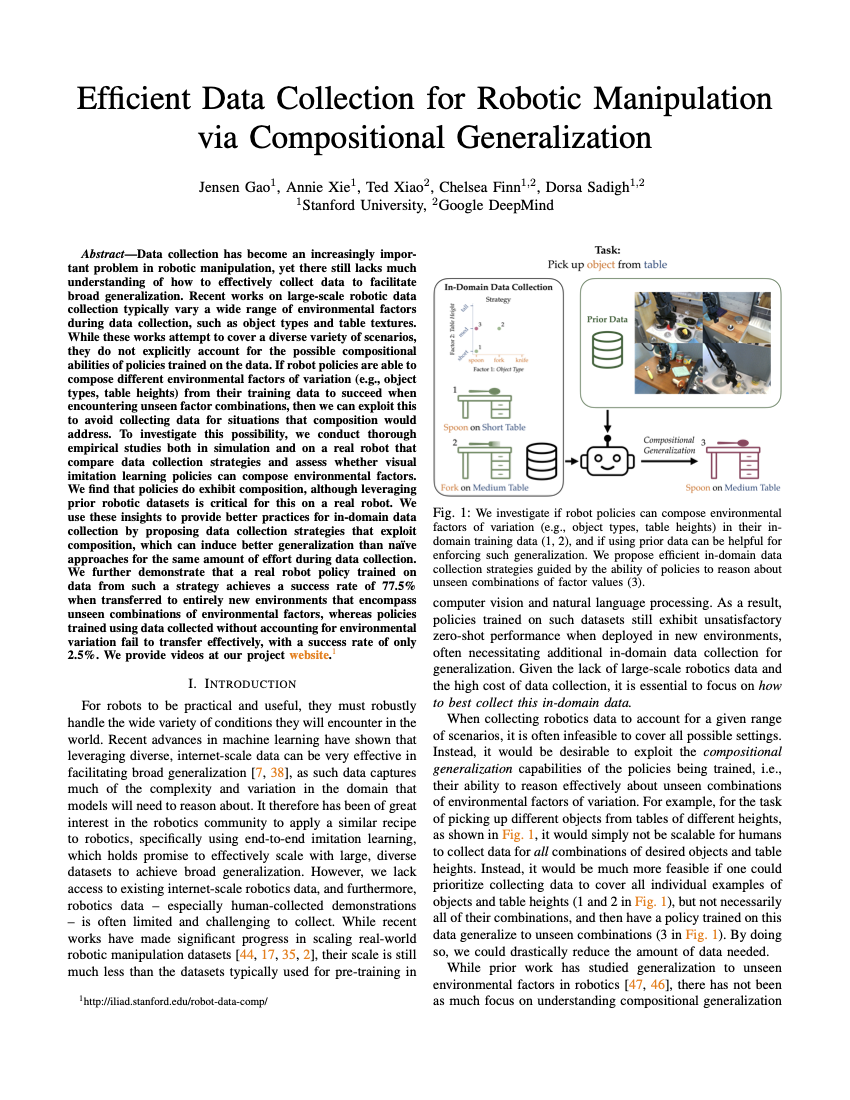

Data collection has become an increasingly important problem in robotic manipulation, yet it remains poorly understood how to collect data effectively to facilitate broad generalization. Recent works on large-scale robotic data collection typically vary many environmental factors (e.g., object types, table textures) during data collection to cover a diverse range of scenarios. However, they do not explicitly account for the possible compositional abilities of policies trained on the data. If robot policies can compose environmental factors from their data to succeed when encountering unseen factor combinations, we can exploit this to avoid collecting data for situations that composition would address. To investigate this possibility, we conduct thorough empirical studies, both in simulation and on a real robot, that compare data collection strategies and assess whether visual imitation learning policies can compose environmental factors. We find that policies do exhibit composition, although leveraging prior robotic datasets is critical for this on a real robot. We use these insights to propose better in-domain data collection strategies that exploit composition, which can induce better generalization than naive approaches for the same amount of data collection effort. We further demonstrate that a real robot policy trained on data from such a strategy achieves a success rate of 77.5% when transferred to entirely new environments that encompass unseen combinations of environmental factors, whereas policies trained using data collected without accounting for environmental variation fail to transfer effectively, with a success rate of only 2.5%.

Video

Real Robot Evaluation

We conduct experiments where a WidowX robot performs the task of putting a fork inside a container, in a real office kitchen. We vary multiple environmental factors for this task, and evaluate whether learned policies generalize compositionally to unseen factor value combinations. Furthermore, we evaluate generalization to entirely new office kitchens that encompass unseen combinations of multiple factor values. We assess the impact of incorporating prior robotic datasets (specifically, BridgeData V2) on these forms of compositional generalization.

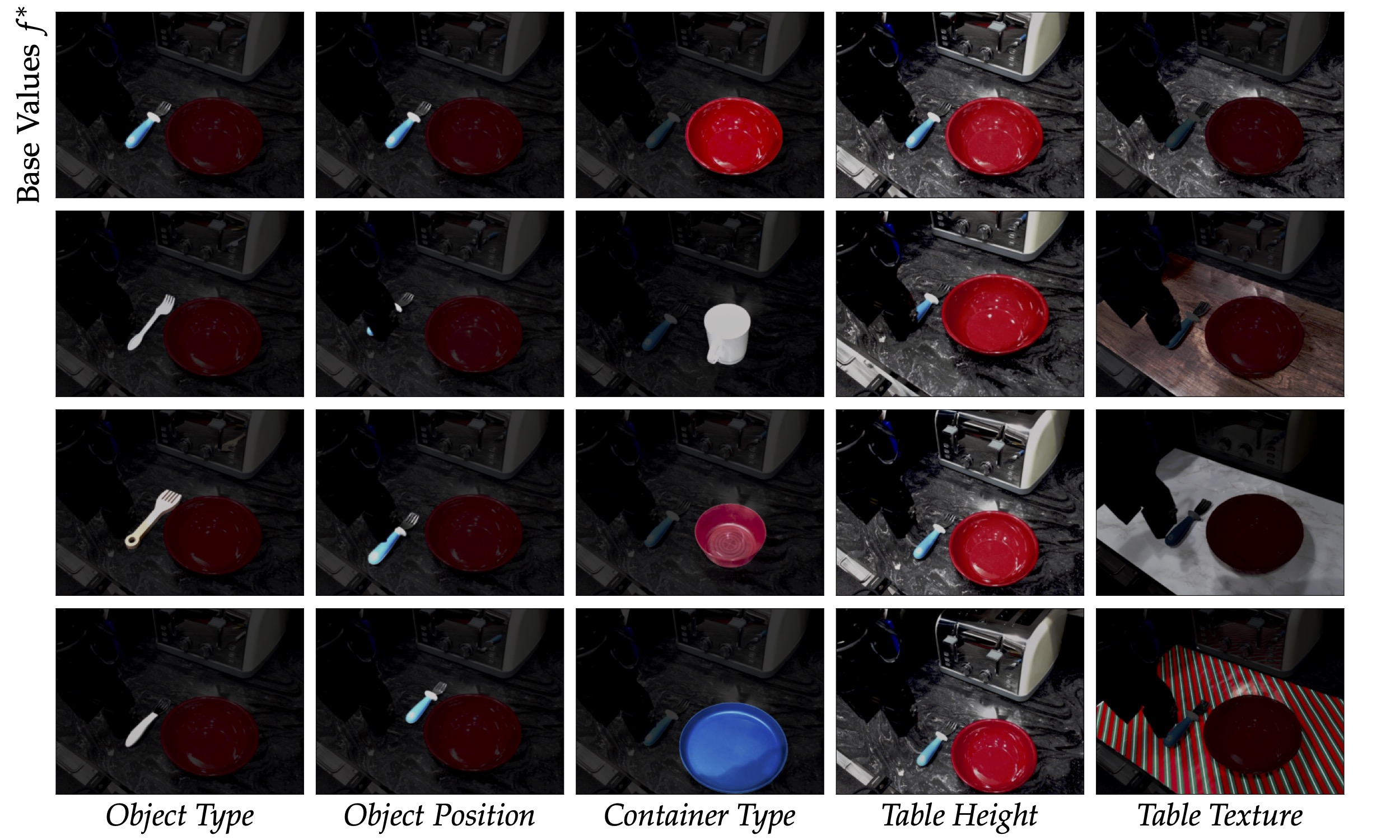

Environmental Factors

We consider the following factors: object (fork) type, object (fork) position, container type, table height, and table texture. Each factor has 4 factor values, and we designate one value per factor as part of our base factor values. Below, we visualize our task with base factor values (top row), and all deviations from this by one factor value.

Pairwise Composition

We collect varied in-domain data according to an L strategy that is intended to exploit compositional generalization (see paper for details). When evaluated on unseen settings that require composing pairs of factor values from this data, a policy trained on this data together with BridgeData V2 succeeds in 59/90 settings. On the same settings, a policy trained from scratch succeeds in only 28/90, and a policy trained using BridgeData V2 but with no variation in its in-domain data succeeds in only 22/90. Below, we show examples where the policy trained on both L data and BridgeData V2 succeeds (right), while the other policies fail (left).
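As a rough illustration of the numbers above, the sketch below enumerates one set of pairwise settings and converts the reported counts into percentages. The enumeration is an assumption: choosing every pair of the 5 factors and setting both to non-base values gives 10 × 9 = 90 settings, which matches the reported total, but the page does not state exactly how the 90 settings were constructed. The value indices and policy labels are likewise hypothetical.

```python
from itertools import combinations, product

FACTORS = [
    "object_type",
    "object_position",
    "container_type",
    "table_height",
    "table_texture",
]
NON_BASE_VALUES = [1, 2, 3]  # hypothetical indices; 0 would be the base value


def pairwise_settings():
    """Enumerate settings where exactly two factors take non-base values."""
    settings = []
    for f1, f2 in combinations(FACTORS, 2):
        for v1, v2 in product(NON_BASE_VALUES, repeat=2):
            settings.append({f1: v1, f2: v2})
    return settings


# Success counts reported on this page, out of 90 evaluated settings.
TOTAL = 90
RESULTS = {
    "L data + BridgeData V2": 59,
    "L data, from scratch": 28,
    "No variation + BridgeData V2": 22,
}


def success_rates():
    """Convert the reported success counts into fractions of the total."""
    return {name: count / TOTAL for name, count in RESULTS.items()}


if __name__ == "__main__":
    print(len(pairwise_settings()))  # 10 factor pairs x 9 value pairs = 90
    for name, rate in success_rates().items():
        print(f"{name}: {rate:.1%}")
```

Expressed as percentages, the three counts correspond to roughly 65.6%, 31.1%, and 24.4%.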

Factor Pair: Object Type + Container Type

L Data w/o Bridge (8x speed)

L Data w/ Bridge (8x speed)

Factor Pair: Container Type + Table Height

L Data w/o Bridge (8x speed)

L Data w/ Bridge (8x speed)

Factor Pair: Object Type + Table Texture

No Variation Data w/ Bridge (8x speed)

L Data w/ Bridge (8x speed)

Factor Pair: Object Position + Table Texture

No Variation Data w/ Bridge (8x speed)

L Data w/ Bridge (8x speed)

Out-of-Domain Transfer

We evaluate generalization to two entirely new office kitchens, which inherently have some factor values that are completely out-of-distribution relative to the original base kitchen where data is collected. This includes factors that were accounted for during data collection (e.g., table texture) and factors that were not (e.g., distractor objects, lighting). We visualize the original kitchen (BaseKitch) and the two new kitchens (CompKitch, TileKitch) below.

BaseKitch

CompKitch

TileKitch

We show examples of transfer to the new kitchens below, enabled by collecting varied data in BaseKitch according to our proposed L strategy, and using prior robot data. We include examples with no further changes from the base factor values aside from those inherent to the new kitchens, and examples with additional factor shifts.

Transfer to CompKitch

No Further Changes (8x speed)

Object Type + Container Type (8x speed)

Transfer to TileKitch

No Further Changes (8x speed)

Object Position + Container Type (8x speed)

Failure Modes

Our policies were unable to compose factors in some situations, most often for factor combinations that interact physically. We show examples of failures for two such factor combinations below.

Object Position + Table Height (8x speed)

Object Position + Container Type (8x speed)

Citation
