Emergent Behaviors in Human-Robot Systems

RSS 2020 Workshop

July 12, 2020

Oregon State University at Corvallis, Oregon, USA (Virtual Event)

- Contact Information

- ebiyik [at] stanford [dot] edu

Audience

This workshop will focus on emergent behaviors in human-robot systems. Hence, the intended audience includes, but is not limited to, researchers who study human-robot interaction and multi-agent systems, with applications such as personal robots, assistive robots, and self-driving cars. The workshop will bring attention to several aspects of emergent behaviors: how they emerge, how they can be predicted, and how we can best benefit from them. Bringing together researchers from various fields, the workshop will interleave talks by speakers from different fields to encourage multidisciplinary discussion and interaction. Discussion and interaction will be further encouraged through break-out sessions, including a panel and debate, as well as the morning and afternoon coffee breaks.

This workshop may also interest the learning community, since many recent developments in multi-agent reinforcement learning focus on how communication and coordination can autonomously emerge in robot teams. In addition, exploring how human agents develop conventions with robots has often served as an inspiration for learning algorithms. Hence, the workshop includes speakers focused on multi-agent learning.

Abstract

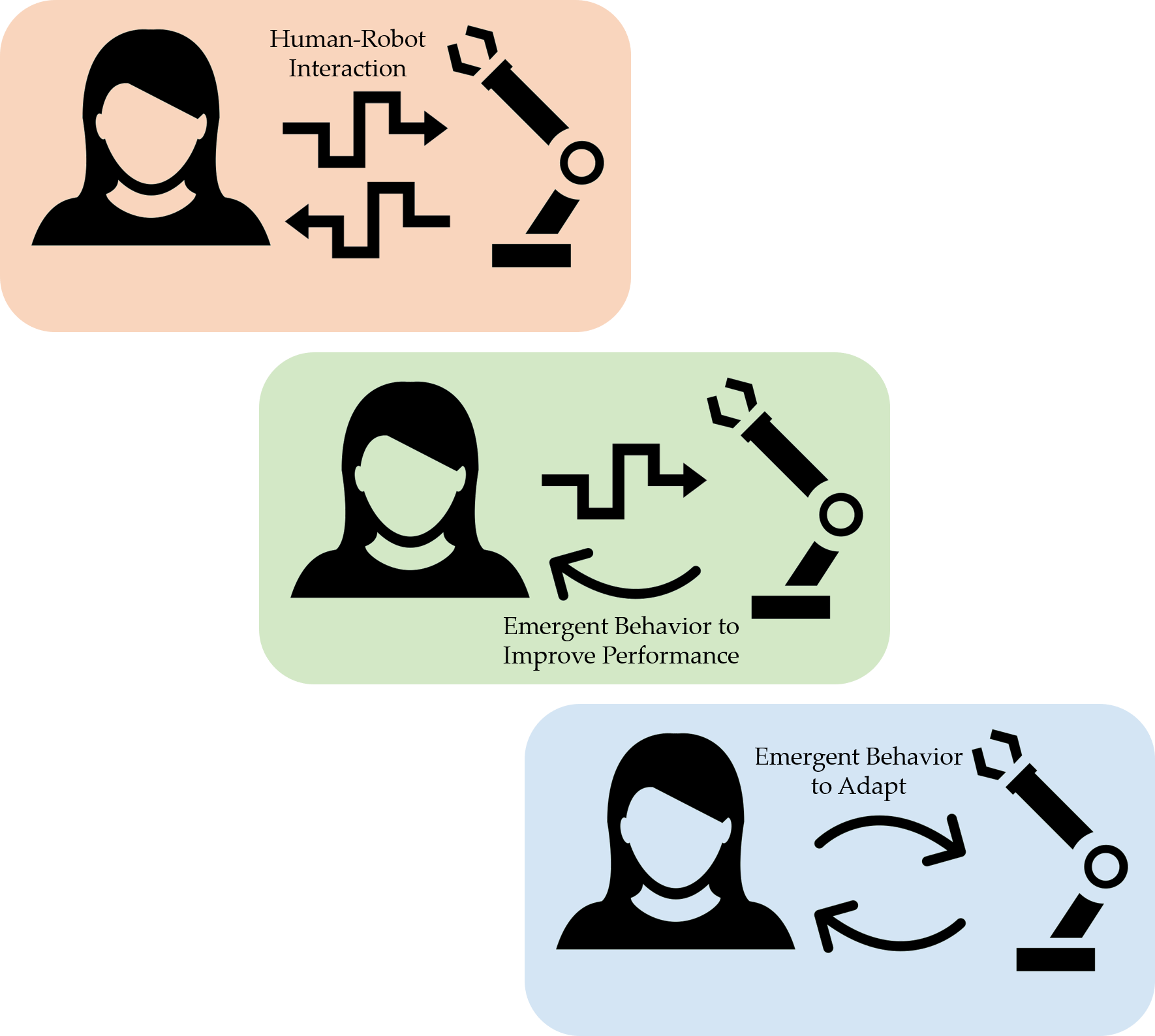

Robots are increasingly becoming members of our everyday community. Self-driving cars, robot teams, and social and assistive robots operate alongside human end-users to carry out various tasks. Similar to conventions between humans, which are low-dimensional representations that capture the interaction and can change over time, emergent behaviors form as a result of repeated long-term interactions in multi-agent systems or human-robot teams. Unfortunately, these emergent behaviors are still not well understood. For instance, the robotics community has observed that many different, and often surprising, robot behaviors can emerge when robots are equipped with artificial intelligence and machine learning techniques. While some of these emergent behaviors are simply undesirable side effects of misspecified objectives, many of them contribute significantly to task performance and influence other agents in the environment. These behaviors can further lead to the development of conventions and to the adaptation of other agents, possibly humans, by encouraging them to approach the task differently.

Goal. We want to investigate how complex and/or unexpected robot behaviors emerge in human-robot systems, and to understand how we can minimize their risks and maximize their benefits. This workshop promotes a discussion on

- How emergent behaviors form in human-robot, human-human, and robot-robot settings

- How we can predict emergent behaviors

- What types of emergent behaviors we would like to see in real-world applications

- How their negative consequences and risks might be alleviated

- How they can be better utilized for more efficient human-robot or multi-agent interaction

Speakers (PDF Version)

Brenna Argall, Northwestern University

Anca Dragan, University of California, Berkeley

Judith Fan, University of California, San Diego

Jakob Foerster, Facebook AI & University of Toronto

Robert D. Hawkins, Princeton University

Maja Matarić, University of Southern California

Negar Mehr, Stanford University & University of Illinois Urbana-Champaign

Igor Mordatch, Google Brain

Harold Soh, National University of Singapore

Schedule

30-minute talks by the invited speakers are available on YouTube and linked below.

All times below are in Pacific Time (PT).

| Time (PT) | Session |
| --- | --- |
| 09:15 AM - 09:30 AM | RSS-wide Virtual Socializing Session |
| 09:30 AM - 10:30 AM | Panel (Speakers: Brenna Argall, Anca Dragan, Judith Fan, Jakob Foerster, Robert D. Hawkins, Maja Matarić, Igor Mordatch) |
| 10:30 AM - 11:00 AM | Spotlight Talks |
| 11:15 AM - 11:30 AM | RSS-wide Virtual Socializing Session |

Recordings

Recordings are available on the YouTube playlist: https://www.youtube.com/playlist?list=PLALgrVO1YLmsXdaRKWtABqONtA81J0t2O. Recordings are listed for the following sessions and speakers:

- Panel

- Spotlight Talks

- Anca Dragan

- Judith Fan

- Jakob Foerster

- Robert D. Hawkins

- Negar Mehr

- Harold Soh

Organizers

Erdem Bıyık

Erdem Bıyık is a third-year Ph.D. candidate in the Electrical Engineering department at Stanford. He is interested in enabling robots to learn from various forms of human feedback, and in designing altruistic robot policies that improve the performance of multi-agent systems in both cooperative and competitive settings. Specifically, he works on developing learning algorithms for robots that actively query humans to gain new abilities, and on training robot policies that influence humans to become more cooperative, which in turn improves the payoff for all agents in the environment. Erdem was supported by the Stanford School of Engineering Fellowship.

Minae Kwon

Minae Kwon is a second-year Ph.D. candidate in the Computer Science department at Stanford. She is broadly interested in enabling robots to intelligently interact with, influence, and adapt to humans. Specifically, she has worked on projects that include creating expressive robot motions, modeling and influencing multi-agent human teams, and enabling a robot to adapt to a human partner over repeated interactions using natural language. Minae has been supported by the Stanford School of Engineering Fellowship and the Qualcomm Innovation Fellowship.

Dylan Losey

Dylan Losey is a postdoctoral scholar in Computer Science at Stanford University. His research interests lie at the intersection of human-robot interaction, learning from humans, and control theory. Specifically, he works on developing algorithms that enable robots to personalize their behavior and collaborate with human partners. Dylan received his Ph.D. in Mechanical Engineering from Rice University in 2018, his M.S. in Mechanical Engineering from Rice University in 2016, and his B.E. in Mechanical Engineering from Vanderbilt University in 2014. During the summer of 2017, Dylan was also a visiting scholar at the University of California, Berkeley. He has been awarded the IEEE/ASME Transactions on Mechatronics Best Paper Award, the Outstanding Ph.D. Thesis Award from the Rice University Department of Mechanical Engineering, and an NSF Graduate Research Fellowship.

Noah Goodman

Noah Goodman is an Associate Professor of Psychology and Computer Science at Stanford University, and a Senior Research Fellow at Uber AI Labs. He studies the computational basis of human and machine intelligence, merging behavioral experiments with formal methods from statistics and programming languages. His research topics include language understanding, social reasoning, concept learning, and causality. In addition, he explores related technologies such as probabilistic programming languages and deep generative models. He has released open-source software including the probabilistic programming languages Church, WebPPL, and Pyro. Professor Goodman received his Ph.D. in mathematics from the University of Texas at Austin in 2003. In 2005 he entered cognitive science, working as a postdoc and research scientist at MIT. In 2010 he moved to Stanford, where he runs the Computation and Cognition Lab. His work has been recognized by the J. S. McDonnell Foundation Scholar Award, the Roger N. Shepard Distinguished Visiting Scholar Award, the Alfred P. Sloan Research Fellowship in Neuroscience, six computational modeling prizes from the Cognitive Science Society, and several best paper awards.

Stefanos Nikolaidis

Stefanos Nikolaidis is an Assistant Professor of Computer Science at the University of Southern California. His research focuses on the mathematical foundations of human-robot interaction, drawing upon expertise in machine learning, algorithmic game theory, and decision making under uncertainty. His work leads to end-to-end solutions that enable deployed robotic systems to act optimally when interacting with people in practical, real-world applications. Previously, Stefanos completed his PhD at Carnegie Mellon's Robotics Institute and received his MS from MIT. He also holds an MEng from the University of Tokyo and a BS from the National Technical University of Athens. Stefanos has worked as a research associate at the University of Washington, as a research specialist at MIT, and as a researcher at Square Enix in Tokyo. His research has been recognized with best paper awards and nominations from the IEEE/ACM International Conference on Human-Robot Interaction, the International Conference on Intelligent Robots and Systems, and the International Symposium on Robotics.

Dorsa Sadigh

Dorsa Sadigh is an Assistant Professor in Computer Science and Electrical Engineering at Stanford University. Her research interests lie at the intersection of robotics, learning and control theory, and algorithmic human-robot interaction. Specifically, she works on developing efficient algorithms for autonomous systems that safely and reliably interact with people. Dorsa received her doctoral degree in Electrical Engineering and Computer Sciences (EECS) from UC Berkeley in 2017 and her bachelor’s degree in EECS from UC Berkeley in 2012. She has been awarded the Amazon Faculty Research Award, the NSF and NDSEG graduate research fellowships, and the Leon O. Chua departmental award.