Physically Grounded Vision-Language Models for Robotic Manipulation

Abstract

Recent advances in vision-language models (VLMs) have led to improved performance on tasks such as visual question answering and image captioning. Consequently, these models are now well-positioned to reason about the physical world, particularly within domains such as robotic manipulation. However, current VLMs are limited in their understanding of the physical concepts (e.g., material, fragility) of common objects, which restricts their usefulness for robotic manipulation tasks that involve interaction and physical reasoning about such objects. To address this limitation, we propose PhysObjects, an object-centric dataset of 39.6K crowd-sourced and 417K automated physical concept annotations of common household objects. We demonstrate that fine-tuning a VLM on PhysObjects improves its understanding of physical object concepts, including generalization to held-out concepts, by capturing human priors of these concepts from visual appearance. We incorporate this physically grounded VLM in an interactive framework with a large language model-based robotic planner, and show improved planning performance on tasks that require reasoning about physical object concepts, compared to baselines that do not leverage physically grounded VLMs. We additionally illustrate the benefits of our physically grounded VLM on a real robot, where it improves task success rates.

PhysObjects Dataset

To benchmark and improve VLMs for object-centric physical reasoning, we compiled the PhysObjects dataset, which contains 39.6K crowd-sourced and 417K automated physical concept annotations. The source of our images is the EgoObjects dataset. We collected annotations for eight physical concepts, listed in the table below. We chose these concepts based on prior work and what we believe to be userful for robotic manipulation. However, we do not consider concepts that would be difficult for humans to estimate from only images, such as friction.

| Concept | Description |

|---|---|

| Mass | How heavy an object is |

| Fragility | How easily an object can be broken/damaged |

| Deformability | How easily an object can change shape without breaking |

| Material | What an object is primarily made of |

| Transparency | How much can be seen through an object |

| Contents | What is inside a container |

| Can Contain Liquid | If a container can be used to easily carry liquid |

| Is Sealed | If a container will not spill if rotated |

| Density (held-out) | How much mass per unit of volume of an object |

| Liquid Capacity (held-out) | How much liquid a container can contain |

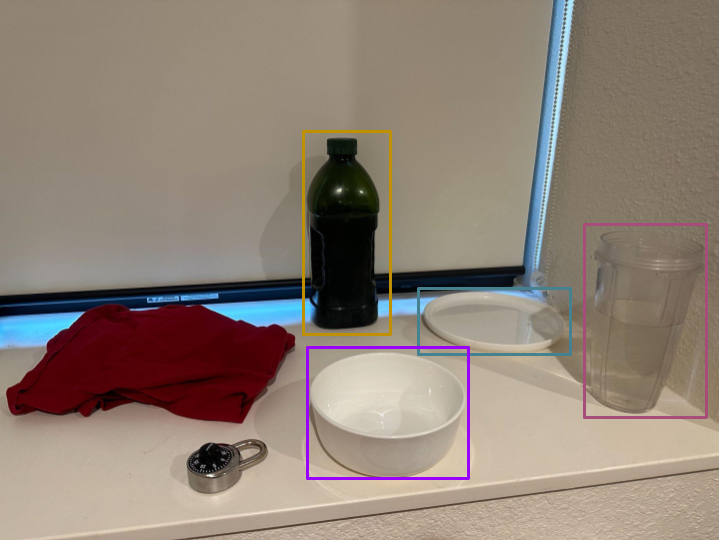

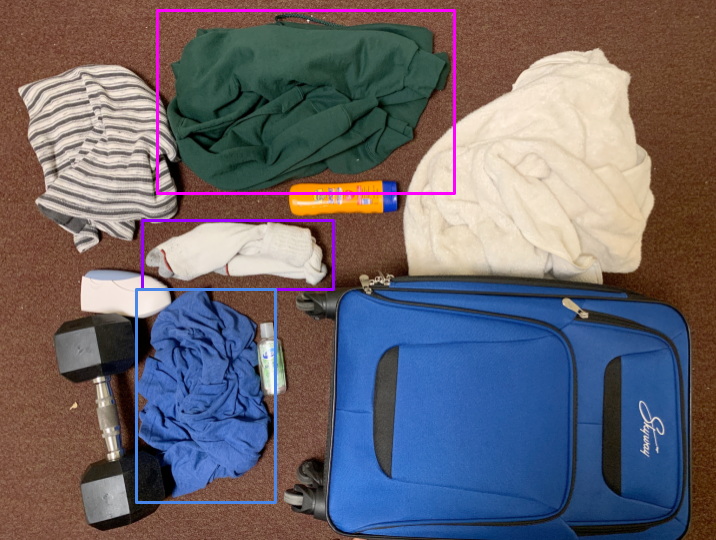

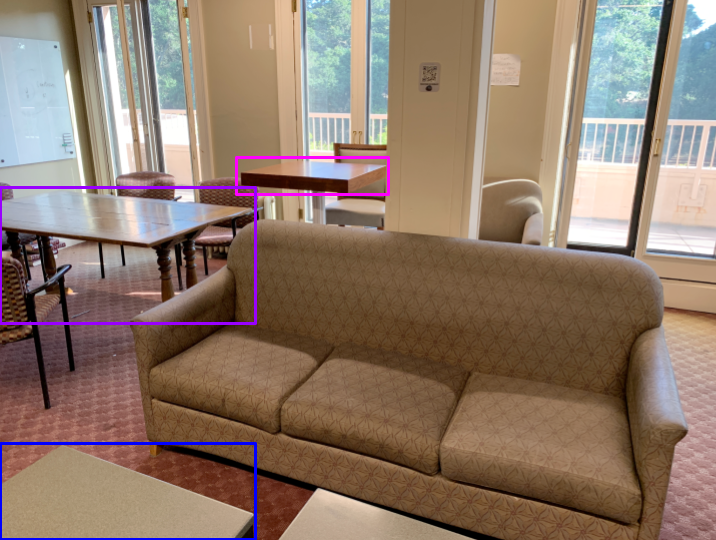

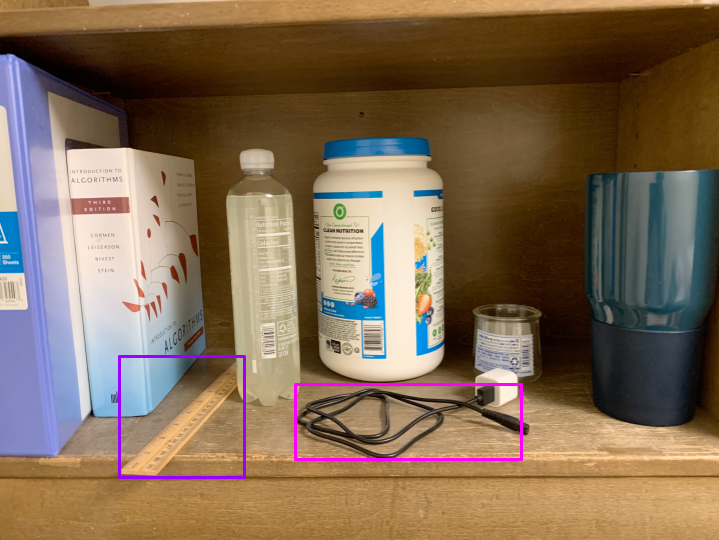

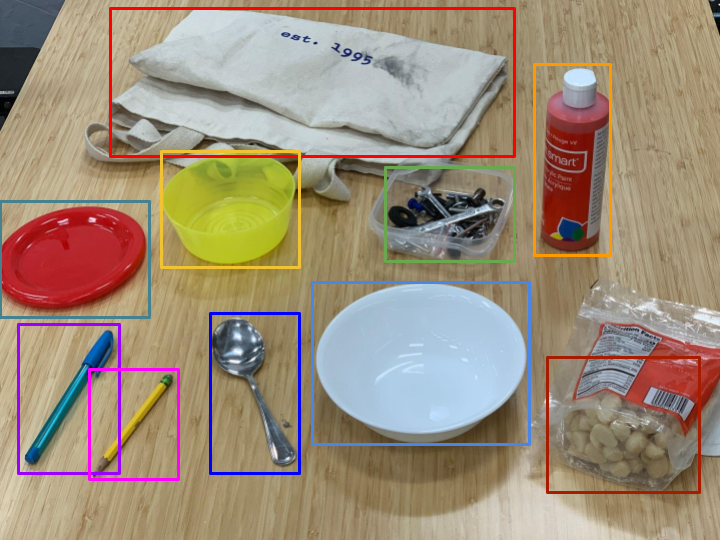

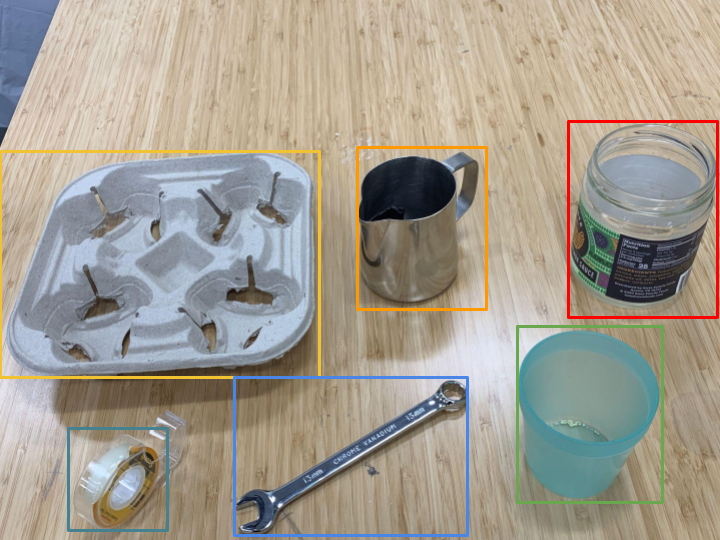

Real Scene Planning Evaluation

Click on a scene image to go to the planning results for a task in that scene.

Citation

The website template was borrowed from Jon Barron and RT-1