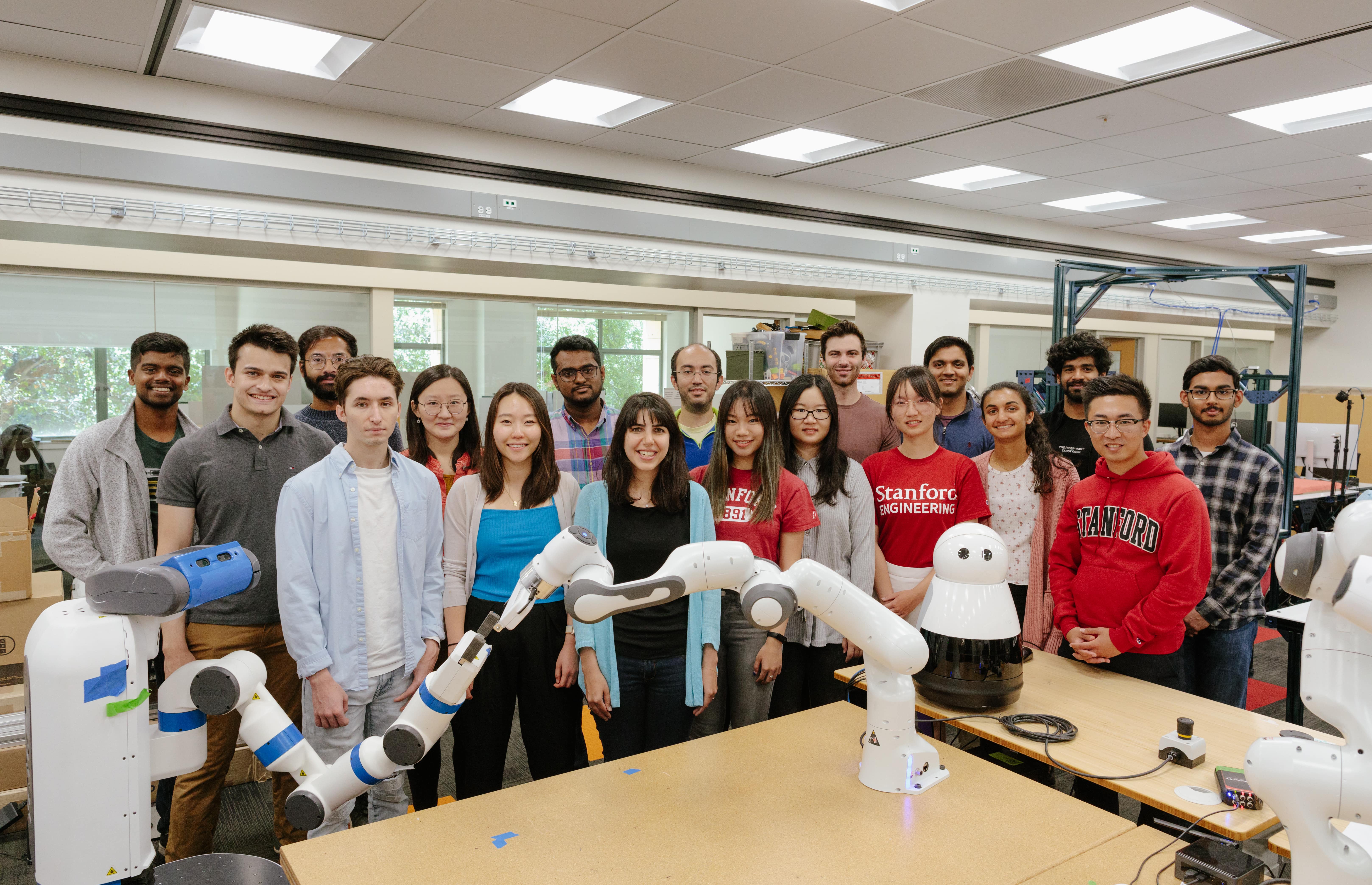

The Stanford Intelligent and Interactive Autonomous Systems Group (ILIAD) develops algorithms for AI agents that interact safely and reliably with people. Our mission is to develop theoretical foundations for human-robot and human-AI interaction. Our group focuses on two directions: 1) formalizing interaction and developing new learning and control algorithms for interactive systems, drawing on tools and techniques from game theory, cognitive science, optimization, and representation learning; and 2) developing practical robotics algorithms that enable robots to safely and seamlessly coordinate with, collaborate with, compete with, or influence humans.